We have 3 color channels: RGB. First align green channel in reference to be, then r in reference to b and call np.dstack(.) to concatenate the three color channels to get a colored image. To do so, we can check similarity by some metric and choose the translation that maximizes the similarity between two color channels The simples metric is to minimize over Euclidean distance between two images $$\text{argmin}_{i, j \in [-15, 15] \times [-15, 15]}\Sigma(im1_{i, j} - im2_{i, j})^2$$. There are cleverer techniques but this is a baseline. Simple alignment with no translation results in an image where colors are clearly misaligned. We can just try to minimize over possible translation grid $$[-15, 15] \times [-15, 15]$$ as described in the equations (I then changed this to $[-20, 20] \times [-20, 20]$ for high-res images and redid the low-res ones in this search space too). The "naive" approach function I implemented for this basically consists of two arguments, im1 and im2 (in this case im2 is always the blue color channels, which is the reference for alignment), a baseline shift = (0, 0), two for loops to iterate over the possible translation space, and saving the best translation the algorithm comes across with Euclidean metric. Although I expected it to be a good colored image it was kind of fuzzy. This was probably because the images are unmasked and contain sides that affect the overall colors with noise. To circumvent this, I cropped all sides by 10 percent, which helped reducing the noise. In the search for a better metric, I found normalized_mutual_information under metrics of skimage package. Very informally, the mutual information between two stochastic variables $$X, Y$$ is defined as $$I(X; Y) = H(X) + H(Y) - H(X, Y)$$, where $$H$$ stands for entropy of the density. Also entropy for a random variable with density $p$ is defined as $$-\Sigma_{x} p(x) \log p(x)$$. and since $$p(x) \in [0, 1] \forall x$$ and log convex and entropy is a convex combination of logs, this is a PSD convex function that is maximized at 1. From this, we can infer from the definition of mutual information, for fixed marginals $$p_x$$ and $$p_y$$, we should try to maximize the mutual information. That's because we are viewing images as stochastic variables with noise, a statistical dependence between two images would increase "randomness", which is not something we want because randomness in this context means noise, which create misaligned images in terms of colors. Basically there should be no stochastic dependence between two as one image should tell most of the information about the image (the structure of the images are nearly identical except for the lens that they were taken with). This is a sufficient statistic. So basically, I changed the Euclidean distance metric to normalized mutual information, which resulted in better resolution images available below.

shift: (5, 2), (12, 3), time: 22.7s

shift: (-3, 2), (3, 2), time: 21.2s

shift: (3, 3), (6, 3), time: 20.8s

For high resolution images, exhaustive search becomes computationally expensive as the search soace grows quadratically. An $$[-15, 15] \times [-15, 15]$$ would require 225 computationally expensive vector operations, which is expensive in terms of computation. Course-to-fine approach is the goal of image pyramids. They start with a low-res version of the image (down sampled, or rescaled in my case) of the image to find approximate alignment on the low-res image, then refine at higher resolutions with smaller search spaces. As you can see, these are hyper-parameters that I had to choose during development. The recursive algorithm I developed recursively downsampled the image by 2 until the dimensions of both axes were smaller that 300. On the base case, I ran the naive algorithm on the lowest resolution image, which results in a shift of (i, j). This is a bottom up recursive approach, so on the higher level of the recursive stack, we have a image twice the size of the current image, so the shift should be multiplied by 2 as well (2i, 2j) and (2i, 2j) is the start of the search at the higher level. And basically from one level above of the base level, I created the same search function for higher resolution images with a way smaller search space ($$[-2, 2] \times [-2, 2]$$ was the most time efficient with best resolution images) and ran the exhaustive search on a smaller search space than before with the same normalized mutual information metric. I repeated this for all levels recursively, until this bottom up recursive solution came back to upper most level with a good alignment. Apart from a python misconception (basically I was trying to multiply the shift tuple by two at higher levels of recursion but apparently it appends a copy to the tuple of the same tuple), I didn't really come across a big challange. I also processed the initially cropped images for noise reduction. However, at the end, the top and left sides of most images had color misalignment. In order to fix that, I cropped 10 percent from the top and left sides after processing with this recursive pyramid approach. Under the images, the caption reads the shift required for green to align to blue and red to align to blue.

shift: (25, 4), (59, -4), time: 45.7s

shift: (49, 23), (106, 40), time: 46.8s

shift: (59, 17), (123, 14), time: 48.9s

shift: (41, 17), (89, 23), time: 48.5s

shift: (54, 9), (116, 12), time: 47.5s

shift: (81, 10), (177, 13), time: 48.4s

shift: (51, 26), (108, 36), time: 46.3s

shift: (78, 29), (176, 37), time: 48.8s

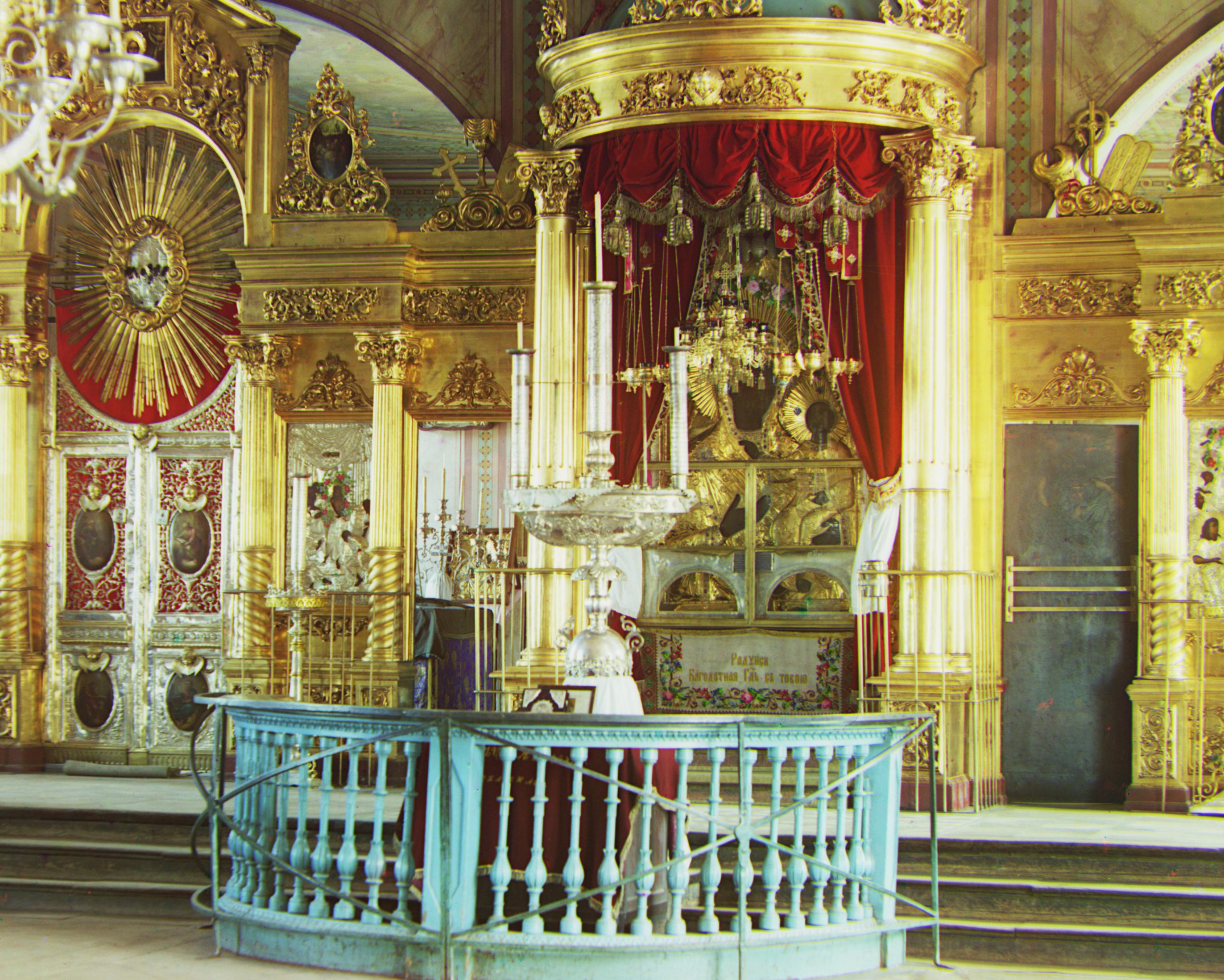

shift: (53, 15), (112, 11), time: 46.8s

shift: (42, 6), (86, 32), time: 45.8s (no extra cropping for this image)

Back to main page